Is AI a force for good?

23 February 2024

Scott Campbell is a senior human rights officer working on digital technology at UN Human Rights. Campbell recently joined UN Human Rights Chief, Volker Türk, on a mission to Silicon Valley where Türk urged big tech, academia and State representatives to “bring human agency and human dignity back to the centre” of digital data-intensive technology. Campbell shares his thoughts on the complex and seismic changes brought on by new technology, and how human rights can be a framework to promote responsible business conduct, protect the rights of users and hold companies and States to account.

The general public’s focus on digital data-intensive technology seems to be shifting from privacy concerns to issues of misinformation and disinformation, hate speech, discrimination, some professions becoming obsolete or even outright servitude under AI’s dominion. Should we worry and, if so, what should we really be worried about?

There is very good reason to be concerned. At the same time, I do not think we should panic. We need a level-headed and reflective response to the many risks of AI. In the media, we often see attention shifting from one potential risk of AI to another, a bit of a 'politics of distraction'. Some focus on dystopian futures; others, as this is a year with many elections around the globe, focus on AI and the impact of disinformation. It is important for us at UN Human Rights, and for other actors engaged on digital tech, to stay focused on the risks that tech poses to a wide range of human rights and not be distracted. AI-enhanced surveillance capacity, for example, is a real, ongoing and growing risk. Our right to privacy is constantly at risk: our data privacy, for example, is under threat through our everyday use of social media and other digital technology.

We need to look at the risks that digital tech and AI pose to our human rights, in particular possible discriminatory outcomes from AI in many sectors, including for people already marginalized along gender, racial and other lines. Another focus of our ongoing work is how online content is being moderated, and whether social media companies are using human rights as guidance for their content policies.

How can tech be a force for good? Can we rely on tech companies to do the right thing?

We can see great potential in using AI and tech: to enhance agricultural outcomes, to enhance access to education, in particular for people living with disabilities, access to health information or better medical outcomes, greater efficiency in jobs... There is a massive amount of potential. And yet, to your question, whether we should rely on tech companies to do the right thing, the answer is clear: absolutely not. We need both regulation and hard laws to carefully rein in tech companies so that technology is indeed used for good and to protect us against potential human rights harms.

At the same time, there is much that tech companies should and can do already today by applying the UN Guiding Principles on Business and Human Rights to what they are doing, including applying human rights due diligence and specific human rights impact assessments for particular policies or products as they design, develop and roll them out.

How can human rights serve as a moral compass for tech companies?

Human rights can serve as more than a moral compass. International human rights law is binding on governments and gives them clear obligations, which should translate into regulations and laws to ensure that tech companies are respecting human rights with their services and products.

The UN Guiding Principles on Business and Human Rights are non-binding, yet they are increasingly being incorporated into legislation and regulation, and there is a growing expectation that companies implement these principles in the way they do business. For tech companies, that means applying them as they design new products and roll them out, and, when their products do cause human rights harms, responding by providing access to remedy.

Volker Türk speaking at a multi-stakeholder panel event on Electoral Integrity and Human Rights, co-hosted by the Norwegian Consulate General of San Francisco and the European Union, February 2024, San Francisco, USA. © OHCHR

The High Commissioner was recently in Silicon Valley where he met with State representatives, academia, researchers and heads of tech companies. What were the most important takeaways from that mission?

A fundamental takeaway from the visit was how crucial it is for UN Human Rights to step further into this space. The mission demonstrated that we have established good partnerships with civil society, academic institutions, certainly with Member States and government representatives that we met with and, importantly, with the tech companies. But it is crucial that we go much deeper and that tech companies do more. They need to apply the UN Guiding Principles on Business and Human Rights much more vigorously, and we need to have the capacity to engage more deeply, and with more companies from across the globe.

States cannot be left out of the picture. They have obligations and need to make sure that all actors, in this case tech companies, are fulfilling their responsibilities to respect human rights.

Building bridges and partnerships with academics and universities around the globe will be crucial for us going forward.

And last but far from least: our partnerships with civil society going forward. Our civil society partners demonstrated an incredible amount of expertise and knowledge during our exchanges with them, and gave us very useful advice on engaging in Silicon Valley. The value of civil society voices in the tech and human rights space cannot be overstated.

How does UN Human Rights work with tech companies and States to ensure that human rights are protected in the digital sphere?

Our engagement directly with companies is fairly extensive in some parts of the world. We have a major program called B-Tech, which seeks to improve the implementation of the Guiding Principles on Business and Human Rights by the tech sector. We engage directly with companies in a closed-door trusted community of practice. We are engaged largely in Europe and North America, but it is crucially important that we expand this project and engage with companies that are based in all regions of the world.

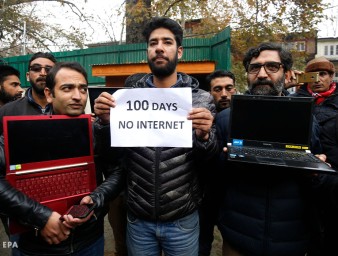

We also engage with Member States, most actively through the Human Rights Council. Our office, along with the special procedures and other human rights mechanisms, is producing reports on a large number of key issues, including the right to privacy in the digital age, internet shutdowns, end-to-end encryption, surveillance (both mass surveillance and surveillance of individuals), and the use of spyware and hacking. These reports provide important recommendations for Member States and companies. We are seeing them taken seriously by Member States and increasingly cited by regional courts around the world. To give one recent example, our work was quoted by the European Court of Human Rights in a decision on end-to-end encryption.